Enhancing Kubernetes with RoCE

A Performance Analysis of RDMA over Converged Ethernet for Container Orchestration Systems

RDMA over Converged Ethernet (RoCE) is a technology that combines the benefits of Remote Direct Memory Access (RDMA) and Ethernet networking to enable high-speed, low-latency communication between nodes in a Kubernetes (K8s) cluster. This can significantly enhance performance for workloads that are bandwidth-intensive or latency-sensitive, such as machine learning, high-performance computing (HPC), and distributed databases.

Here's how RoCE for Kubernetes works, its benefits, and considerations for implementation:

What is RoCE?

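Remote Direct Memory Access (RDMA): A networking technology that lets one computer read from or write to another computer's memory directly. The NIC performs the transfer, bypassing the operating system kernel on the data path and without involving the remote host's CPU, which minimizes latency and CPU overhead.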

Converged Ethernet:

Uses standard Ethernet as the transport medium for RDMA traffic, allowing RDMA to operate over existing Ethernet infrastructure. RoCE comes in two versions:

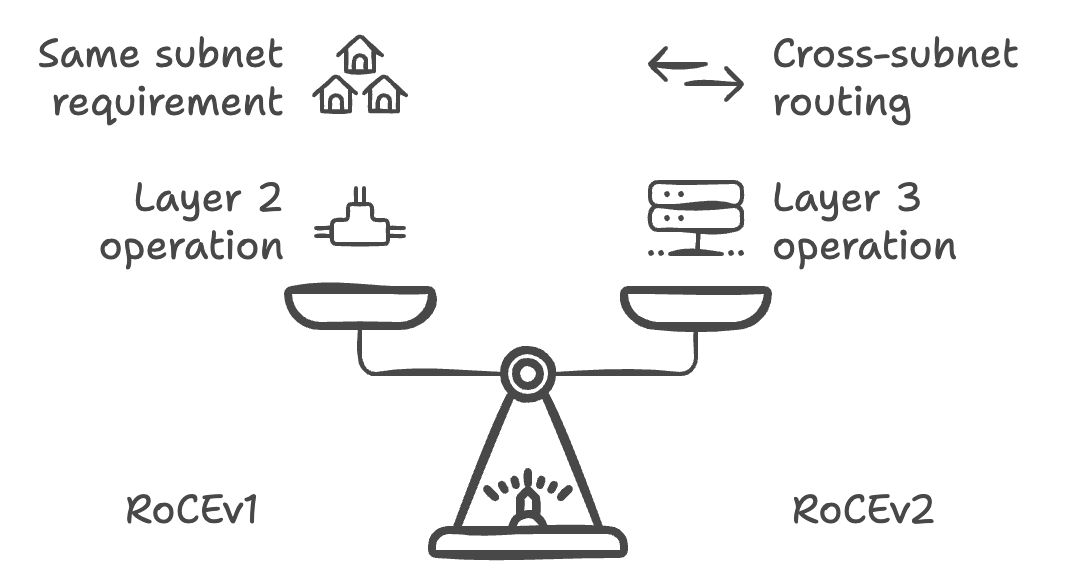

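RoCEv1: Works at Layer 2 (data link layer). Its packets are carried directly in Ethernet frames (Ethertype 0x8915), so communicating devices must share the same Layer 2 broadcast domain (in practice, the same subnet or VLAN).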

RoCEv2: Operates at Layer 3 (network layer) by encapsulating RDMA traffic in UDP/IP (UDP destination port 4791). Because it can be routed across subnets, it is more suitable for large-scale Kubernetes clusters.
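The difference between the two versions is visible on the wire. The sketch below classifies a raw Ethernet frame as RoCEv1 (Ethertype 0x8915, carried directly in Ethernet) or RoCEv2 (UDP/IP to destination port 4791). It is illustrative only: it skips at most one 802.1Q VLAN tag and does not follow IPv6 extension headers.

```python
import struct

ETHERTYPE_VLAN = 0x8100    # 802.1Q tag
ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_IPV6 = 0x86DD
ETHERTYPE_ROCEV1 = 0x8915  # RoCEv1: RDMA headers follow the Ethernet header directly
ROCEV2_UDP_PORT = 4791     # RoCEv2: RDMA headers carried in UDP to this destination port
IPPROTO_UDP = 17


def classify_frame(frame: bytes) -> str:
    """Return 'RoCEv1', 'RoCEv2', or 'other' for a raw Ethernet frame."""
    if len(frame) < 14:
        return "other"
    ethertype = struct.unpack_from("!H", frame, 12)[0]
    offset = 14
    if ethertype == ETHERTYPE_VLAN:  # skip a single VLAN tag
        if len(frame) < 18:
            return "other"
        ethertype = struct.unpack_from("!H", frame, 16)[0]
        offset = 18
    if ethertype == ETHERTYPE_ROCEV1:
        return "RoCEv1"
    if ethertype == ETHERTYPE_IPV4:
        if len(frame) < offset + 20:
            return "other"
        header_len = (frame[offset] & 0x0F) * 4  # IHL field, in 32-bit words
        proto = frame[offset + 9]
        udp_start = offset + header_len
    elif ethertype == ETHERTYPE_IPV6:
        if len(frame) < offset + 40:
            return "other"
        proto = frame[offset + 6]  # Next Header; extension headers are not followed
        udp_start = offset + 40
    else:
        return "other"
    if proto != IPPROTO_UDP or len(frame) < udp_start + 4:
        return "other"
    dst_port = struct.unpack_from("!H", frame, udp_start + 2)[0]
    return "RoCEv2" if dst_port == ROCEV2_UDP_PORT else "other"
```

Because RoCEv2 is ordinary UDP/IP from the network's point of view, routers forward it like any other IP traffic, which is what makes it routable across subnets.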

Why Use RoCE in Kubernetes?

RoCE is beneficial in Kubernetes clusters for specific workloads requiring high throughput, low latency, and minimal CPU involvement.

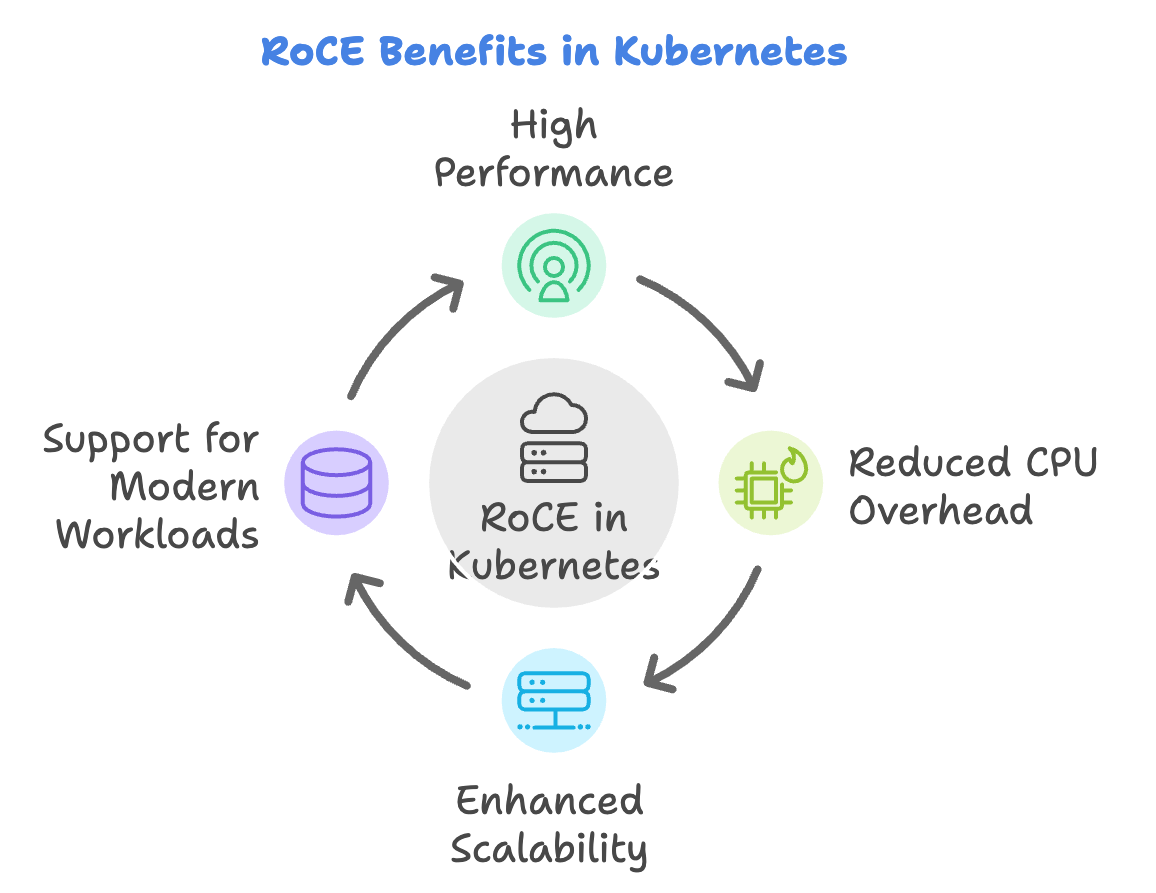

High Performance: RoCE bypasses the kernel TCP/IP stack, enabling very low-latency communication between pods or nodes. This makes it suitable for performance-critical workloads such as AI/ML training, financial modeling, and large-scale analytics.

Reduced CPU Overhead: Kernel-based TCP/IP networking consumes significant CPU cycles for data copying and protocol processing. RoCE offloads this work to the NIC, freeing the CPU for application-level processing.

Enhanced Scalability: Because RoCEv2 is routable, RDMA traffic can span multiple subnets, which is essential for large, distributed Kubernetes clusters.

Support for Modern Workloads: RoCE accelerates storage and data-centric workloads such as NVMe-over-Fabrics (NVMe-oF), distributed file systems, and message-passing frameworks like MPI.

Setting Up RoCE in Kubernetes

To implement RoCE in a Kubernetes environment, follow these steps:

Prerequisites

Hardware Support: Network Interface Cards (NICs) with RDMA support (e.g., the Mellanox ConnectX series, now NVIDIA), and Ethernet switches that support Priority Flow Control (PFC) and Enhanced Transmission Selection (ETS) for reliable RoCE traffic.

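Software Stack: RDMA drivers and user-space libraries installed on all nodes (e.g., Mellanox OFED or rdma-core), and a Kubernetes cluster whose Container Network Interface (CNI) setup can attach an RDMA-capable secondary interface to pods (e.g., Multus CNI combined with a plugin such as SR-IOV CNI or macvlan).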
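Before going further, it helps to confirm that each node actually exposes an RDMA device. A minimal sketch, assuming a Linux node where the RDMA drivers populate the standard sysfs tree under /sys/class/infiniband: every RDMA port reports its link layer there, and RoCE ports report Ethernet (native InfiniBand ports report InfiniBand).

```python
from pathlib import Path


def rdma_ports(sysfs_root: str = "/sys/class/infiniband") -> dict:
    """Map 'device/port' to its link layer ('Ethernet' for RoCE, 'InfiniBand' for IB)."""
    root = Path(sysfs_root)
    result = {}
    if not root.is_dir():
        return result  # no RDMA drivers loaded, or no RDMA-capable device present
    for dev in sorted(root.iterdir()):
        ports_dir = dev / "ports"
        if not ports_dir.is_dir():
            continue
        # Port directories are numbered (1, 2, ...), so sort them numerically
        for port in sorted(ports_dir.iterdir(), key=lambda p: int(p.name)):
            link_layer_file = port / "link_layer"
            if link_layer_file.is_file():
                result[f"{dev.name}/{port.name}"] = link_layer_file.read_text().strip()
    return result


def has_roce_port(sysfs_root: str = "/sys/class/infiniband") -> bool:
    """True if at least one RDMA port on this node runs over Ethernet (RoCE)."""
    return any(layer == "Ethernet" for layer in rdma_ports(sysfs_root).values())


if __name__ == "__main__":
    print(rdma_ports())
    print("RoCE-capable:", has_roce_port())
```

Run on every node (for example from a DaemonSet or a one-off debug pod with the host's /sys mounted), this gives a quick inventory of which nodes are actually RoCE-ready.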

Configure the Network for RoCE

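Enable RoCE on NICs: Configure the NICs for RDMA and RoCEv2, typically with vendor-specific utilities alongside standard tools such as ethtool. Enable PFC for the priority that carries RoCE traffic on both the NICs and the switches; this makes that traffic class lossless, which RoCE relies on to avoid costly retransmissions.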
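RoCEv2 traffic is usually steered into its lossless class by DSCP marking. The sketch below computes the IP ToS byte for a DSCP value with the ECN-capable bit set, maps the DSCP value to an 802.1p priority, and builds the eight-entry per-priority PFC enable vector. Both DSCP 26 and the "top three DSCP bits" mapping (which places it on priority 3) are assumptions reflecting a common default, not requirements. Match whatever your NICs and switches are actually configured to use.

```python
def tos_byte(dscp: int, ecn: int = 0b10) -> int:
    """IP ToS / Traffic Class byte: DSCP in the upper 6 bits, ECN in the lower 2.

    The default ecn=0b10 is ECT(0), marking the packet as ECN-capable.
    """
    if not 0 <= dscp < 64 or not 0 <= ecn < 4:
        raise ValueError("DSCP must be 0-63 and ECN 0-3")
    return (dscp << 2) | ecn


def dscp_to_priority(dscp: int) -> int:
    """Assumed mapping: priority = top 3 bits of DSCP (common, but not universal)."""
    return dscp >> 3


def pfc_vector(lossless_priorities: set) -> list:
    """Per-priority PFC enable flags for 802.1p priorities 0..7."""
    return [1 if prio in lossless_priorities else 0 for prio in range(8)]


ROCE_DSCP = 26  # assumption: a DSCP value commonly used for RoCE traffic
prio = dscp_to_priority(ROCE_DSCP)
print("ToS byte:", tos_byte(ROCE_DSCP))                         # 106
print("priority:", prio)                                        # 3
print("PFC vector:", ",".join(map(str, pfc_vector({prio}))))    # 0,0,0,1,0,0,0,0
```

The comma-separated vector is the general shape of the per-priority settings that DCB tools work with; the exact command syntax differs between NIC and switch vendors, so check their documentation before applying it.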

Set up VLANs or Subnets: Use VLANs for traffic segmentation and Quality of Service (QoS). Configure Layer 3 routing for RoCEv2 to enable communication across subnets.

Deploy Kubernetes with RDMA Support

CNI Plugin: Use a meta-plugin such as Multus CNI to attach multiple network interfaces to each pod, so that RDMA traffic runs on a dedicated secondary interface, separate from regular cluster traffic.
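With Multus, each secondary network is described by a NetworkAttachmentDefinition custom resource whose spec.config holds a delegate CNI configuration as a JSON string. The sketch below builds one for a macvlan interface on top of the RoCE NIC. The interface name ens1f0, the network name rdma-net, and the subnet are hypothetical placeholders.

```python
import json


def macvlan_nad(name: str, master: str, subnet: str, namespace: str = "default") -> dict:
    """Build a Multus NetworkAttachmentDefinition for a macvlan secondary interface."""
    cni_config = {
        "cniVersion": "0.3.1",
        "type": "macvlan",   # delegate plugin that creates the pod's secondary interface
        "master": master,    # host NIC that carries RoCE traffic
        "mode": "bridge",
        "ipam": {"type": "host-local", "subnet": subnet},
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name, "namespace": namespace},
        # Multus expects the delegate CNI configuration as a JSON *string*
        "spec": {"config": json.dumps(cni_config)},
    }


# Hypothetical values for illustration only
nad = macvlan_nad("rdma-net", master="ens1f0", subnet="192.168.100.0/24")
print(json.dumps(nad, indent=2))
```

The printed JSON can be saved to a file and applied with kubectl apply -f. The host-local IPAM used here allocates addresses independently on each node, so a multi-node deployment would normally switch to a cluster-wide IPAM plugin such as Whereabouts.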

RDMA Device Plugin: Deploy an RDMA device plugin (e.g., the Mellanox k8s-rdma-shared-dev-plugin or the SR-IOV network device plugin, both of which the NVIDIA Network Operator can deploy) to expose RDMA devices to pods as schedulable resources. This allows pods to use RDMA-capable interfaces for high-performance communication.
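Once a device plugin is running, pods consume RDMA devices as extended resources, requested under resources.limits like any other extended resource. The resource name rdma/rdma_shared_device_a below is hypothetical (the real name is whatever your plugin configuration advertises), as is the container image. Adding the IPC_LOCK capability is a common requirement, because RDMA memory registration pins pages in memory.

```python
import json

# Hypothetical resource name; use the one your device plugin actually advertises
RDMA_RESOURCE = "rdma/rdma_shared_device_a"


def rdma_pod(name: str, image: str, rdma_count: int = 1) -> dict:
    """Build a pod manifest that requests RDMA devices from a device plugin."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                "securityContext": {
                    # RDMA memory registration pins pages; IPC_LOCK permits that
                    "capabilities": {"add": ["IPC_LOCK"]},
                },
                "resources": {
                    # Extended resources are requested via limits and are never overcommitted
                    "limits": {RDMA_RESOURCE: str(rdma_count)},
                },
            }],
        },
    }


pod = rdma_pod("rdma-test", "example.com/rdma-app:latest")  # hypothetical image
print(json.dumps(pod, indent=2))
```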

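Pod Annotations: Use Kubernetes annotations (with Multus, k8s.v1.cni.cncf.io/networks) to attach specific workloads to the RDMA interface.

Test RoCE Connectivity: Verify RDMA connectivity between nodes with tools such as rping or the perftest utilities (e.g., ib_write_bw and ib_send_lat); ibping depends on InfiniBand subnet management and generally does not work over RoCE. Benchmark your application with and without RoCE to confirm that it meets your performance targets.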
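The annotation and testing steps can be sketched together: add the k8s.v1.cni.cncf.io/networks annotation to a pod, read the secondary-network IP that Multus reports back in the k8s.v1.cni.cncf.io/network-status annotation, and build the rping server and client commands to run in two such pods. The network name rdma-net and the sample addresses are hypothetical, and the default namespace is assumed.

```python
import json

NETWORKS_ANNOTATION = "k8s.v1.cni.cncf.io/networks"
STATUS_ANNOTATION = "k8s.v1.cni.cncf.io/network-status"


def attach_network(pod: dict, nad_name: str) -> dict:
    """Request a Multus secondary network by adding it to the networks annotation."""
    annotations = pod.setdefault("metadata", {}).setdefault("annotations", {})
    existing = [n for n in annotations.get(NETWORKS_ANNOTATION, "").split(",") if n]
    if nad_name not in existing:
        existing.append(nad_name)
    annotations[NETWORKS_ANNOTATION] = ",".join(existing)
    return pod


def secondary_ip(pod: dict, nad_name: str, namespace: str = "default") -> str:
    """Read the pod's IP on the named network from Multus' network-status annotation."""
    status = json.loads(pod["metadata"]["annotations"][STATUS_ANNOTATION])
    for entry in status:
        # Multus reports attached networks as "<namespace>/<name>"
        if entry.get("name") == f"{namespace}/{nad_name}" and entry.get("ips"):
            return entry["ips"][0]
    raise LookupError(f"no IP reported for network {nad_name}")


def rping_commands(server_ip: str, count: int = 10) -> tuple:
    """rping server and client invocations, to run in two RDMA-enabled pods."""
    server = ["rping", "-s", "-a", server_ip, "-v"]
    client = ["rping", "-c", "-a", server_ip, "-v", "-C", str(count)]
    return server, client
```

In practice the network-status annotation is read from the running server pod (for example via kubectl get pod -o json), and the two command lists are executed with kubectl exec in the server and client pods respectively.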

Optimize Kubernetes for RoCE

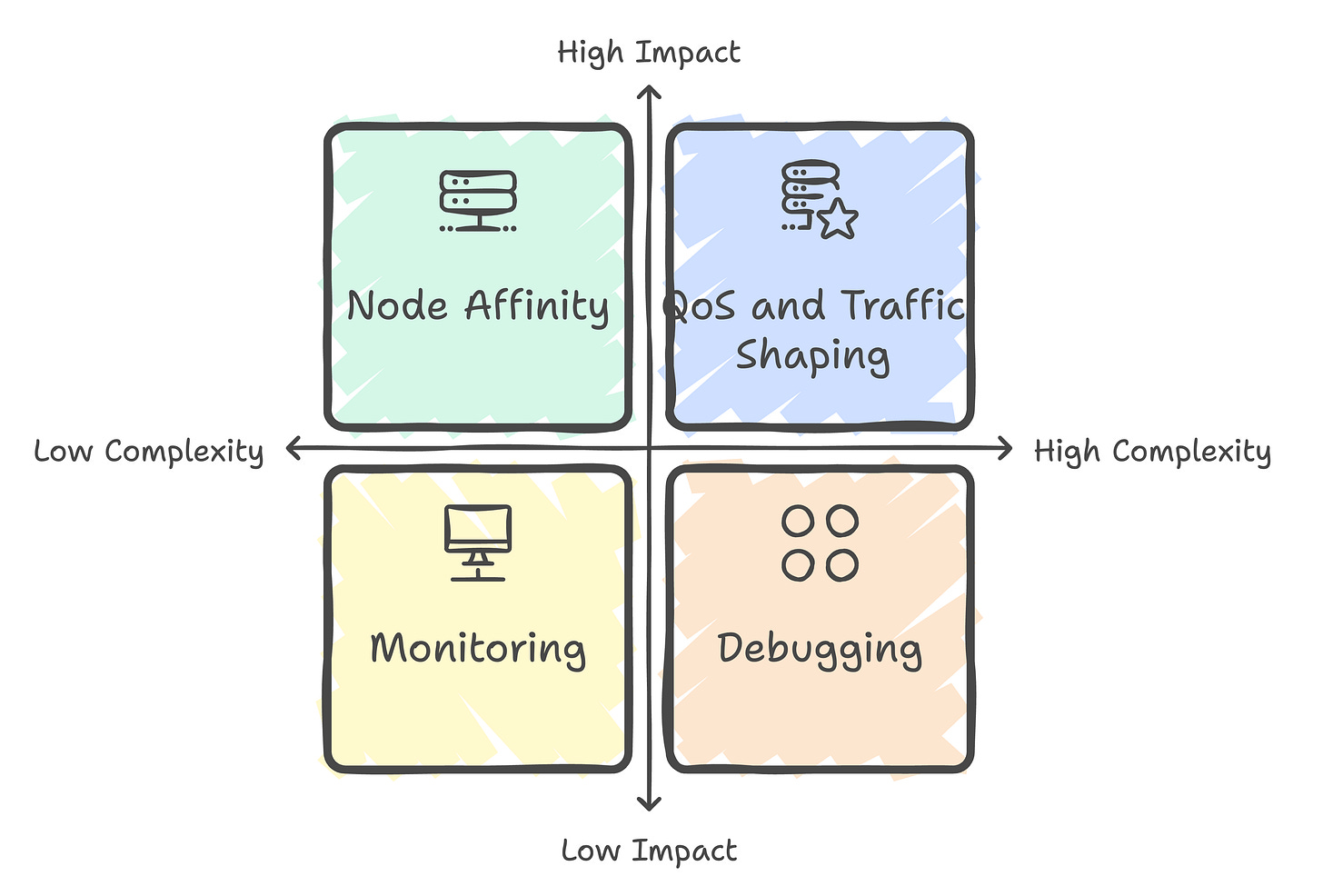

Node Affinity: Use node affinity to schedule pods requiring RDMA to nodes with RDMA-enabled NICs.
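Node affinity is expressed in the pod spec under affinity.nodeAffinity. The sketch below adds a hard requirement (requiredDuringSchedulingIgnoredDuringExecution) on a node label, plus a simplified matcher for the In operator showing how the rule is evaluated: expressions within a term are ANDed, and terms are ORed. The label example.com/rdma-capable is hypothetical; node labels might come from your own labelling or from a tool such as Node Feature Discovery.

```python
# Hypothetical node label; use whatever label your RDMA nodes actually carry
RDMA_NODE_LABEL = "example.com/rdma-capable"


def require_rdma_nodes(pod: dict, label: str = RDMA_NODE_LABEL) -> dict:
    """Add a hard node-affinity rule: schedule only on nodes where label == 'true'."""
    term = {"matchExpressions": [{"key": label, "operator": "In", "values": ["true"]}]}
    spec = pod.setdefault("spec", {})
    spec.setdefault("affinity", {})["nodeAffinity"] = {
        "requiredDuringSchedulingIgnoredDuringExecution": {
            "nodeSelectorTerms": [term],
        }
    }
    return pod


def node_matches(node_labels: dict, pod: dict) -> bool:
    """Simplified scheduler check supporting only the 'In' operator."""
    terms = pod["spec"]["affinity"]["nodeAffinity"][
        "requiredDuringSchedulingIgnoredDuringExecution"]["nodeSelectorTerms"]
    return any(
        all(node_labels.get(e["key"]) in e["values"] for e in t["matchExpressions"])
        for t in terms
    )
```

If pods already request the RDMA extended resource, the scheduler will only place them on nodes that advertise it; an explicit affinity rule is still useful for steering workloads toward a particular class of RDMA node.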

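QoS and Traffic Shaping: Kubernetes QoS classes (Guaranteed, Burstable, BestEffort) are derived from CPU and memory requests and limits; they do not prioritize network traffic or RDMA devices. Give critical RDMA workloads explicit device requests (and Guaranteed QoS if they need predictable CPU and memory), and enforce traffic priority in the network itself by assigning RoCE traffic to a dedicated priority with PFC and ETS.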
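Kubernetes derives a pod's QoS class from CPU and memory requests and limits only, so requesting an RDMA extended resource does not change it. A simplified version of that rule is sketched below. It ignores init containers and compares quantity strings literally (so "1" and "1000m" would be treated as different), which the real implementation does not.

```python
QOS_RESOURCES = ("cpu", "memory")  # the only resources that affect the QoS class


def qos_class(containers: list) -> str:
    """Simplified Kubernetes QoS class computation for a pod's containers."""
    any_set = False
    guaranteed = True
    for c in containers:
        res = c.get("resources", {})
        limits = res.get("limits", {})
        # When only a limit is given, Kubernetes defaults the request to that limit
        requests = {**limits, **res.get("requests", {})}
        for r in QOS_RESOURCES:
            if r in limits or r in requests:
                any_set = True
            # Guaranteed needs a limit and an equal request for cpu and memory everywhere
            if r not in limits or requests.get(r) != limits[r]:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


# A pod that pins cpu/memory and also requests a (hypothetical) RDMA resource
print(qos_class([{"resources": {"limits": {
    "cpu": "4", "memory": "8Gi", "rdma/rdma_shared_device_a": "1"}}}]))
```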

Monitoring and Debugging: Check port state with ibstat, track RDMA traffic through the NIC's hardware counters, and export those counters as Prometheus metrics so they can be viewed alongside other cluster dashboards.
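Per-port traffic counters are exposed in sysfs on drivers that populate them. The sketch below reads port_xmit_data and port_rcv_data, which count data in 4-byte units, and converts two samples into Gbit/s. The device name mlx5_0 is a placeholder, and counter resets are not handled.

```python
import time
from pathlib import Path

BYTES_PER_UNIT = 4  # port_xmit_data / port_rcv_data count data octets divided by 4
COUNTERS = ("port_xmit_data", "port_rcv_data")


def read_port_counters(dev: str, port: int = 1, root: str = "/sys/class/infiniband") -> dict:
    """Read the transmit/receive data counters for one RDMA port."""
    counters = Path(root, dev, "ports", str(port), "counters")
    return {name: int((counters / name).read_text()) for name in COUNTERS}


def throughput_gbps(before: dict, after: dict, interval_s: float) -> dict:
    """Convert two counter samples taken interval_s seconds apart into Gbit/s."""
    return {
        name: (after[name] - before[name]) * BYTES_PER_UNIT * 8 / interval_s / 1e9
        for name in before
    }


if __name__ == "__main__":
    first = read_port_counters("mlx5_0")  # placeholder device name
    time.sleep(1.0)
    second = read_port_counters("mlx5_0")
    print(throughput_gbps(first, second, 1.0))
```

For Prometheus, node_exporter's InfiniBand collector reads this same sysfs tree, so the counters can feed cluster-wide dashboards without custom code.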

Use Cases for RoCE in Kubernetes

Machine Learning (ML) Training: Accelerates inter-node GPU-to-GPU communication and parameter synchronization (for example, NCCL collectives), particularly when combined with GPUDirect RDMA.

High-Performance Computing (HPC): Enhances performance for MPI-based distributed computing workloads.

Distributed Storage Systems: Boosts performance for NVMe-over-Fabrics (NVMe-oF) and Ceph RDMA clusters.

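Low-Latency Databases: Can improve response times for databases and key-value stores that provide an RDMA-capable transport. Mainstream builds of systems such as Redis or Cassandra communicate over TCP by default, so they benefit only through RDMA-enabled variants or extensions.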

Challenges and Considerations

Hardware Costs: Requires RDMA-capable NICs and switches, which may increase infrastructure costs.

Configuration Complexity: Setting up lossless Ethernet with PFC and ETS can be challenging.
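A typical source of that complexity is configuration drift between NICs and switches. The hypothetical checker below catches a few common mistakes in values you have collected yourself: PFC vectors that differ between NIC and switch, PFC disabled on the RoCE priority, ETS shares that do not sum to 100%, and a RoCE traffic class with no bandwidth. It assumes the RoCE class is ETS-scheduled rather than strict priority, and it is a sketch, not a complete validation.

```python
def check_dcb(nic_pfc: list, switch_pfc: list, ets_bw: list,
              prio_to_tc: list, roce_prio: int) -> list:
    """Return human-readable problems in a NIC/switch DCB configuration.

    nic_pfc, switch_pfc: 8 PFC enable flags, one per 802.1p priority
    ets_bw: ETS bandwidth percentage per traffic class
    prio_to_tc: traffic class assigned to each of the 8 priorities
    """
    problems = []
    if nic_pfc != switch_pfc:
        problems.append(f"PFC mismatch: NIC {nic_pfc} vs switch {switch_pfc}")
    if not nic_pfc[roce_prio]:
        problems.append(f"PFC disabled on RoCE priority {roce_prio}")
    if sum(ets_bw) != 100:
        problems.append(f"ETS shares sum to {sum(ets_bw)}%, expected 100%")
    roce_tc = prio_to_tc[roce_prio]
    if not 0 <= roce_tc < len(ets_bw) or ets_bw[roce_tc] == 0:
        problems.append(f"RoCE traffic class {roce_tc} has no ETS bandwidth")
    return problems


# Example: RoCE on priority 3, mapped to traffic class 1 with a 50% share
print(check_dcb([0, 0, 0, 1, 0, 0, 0, 0], [0, 0, 0, 1, 0, 0, 0, 0],
                [50, 50, 0, 0, 0, 0, 0, 0], [0, 0, 0, 1, 0, 0, 0, 0], 3))
```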

Software Stack Maturity: RDMA Kubernetes plugins and CNIs are improving but might have limited support for some use cases.

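Fault Tolerance: RoCE performance degrades sharply under packet loss, so congestion handling needs careful configuration, especially in large clusters. Misconfigured PFC can also cause pause storms and head-of-line blocking, which is why it is often paired with ECN-based congestion control.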

Conclusion

RDMA over Converged Ethernet (RoCE) can significantly enhance Kubernetes clusters for workloads requiring high bandwidth and low latency, such as AI/ML, HPC, and storage systems. By integrating RoCE with Kubernetes through RDMA plugins and appropriate CNI configurations, you can achieve substantial performance gains. However, it requires careful planning, specialized hardware, and ongoing management to ensure optimal performance and scalability.